What changes when AI is the primary interaction model

In a traditional product, components handle a familiar set of states: default, hover, active, disabled, loading, error, and success.

In an AI-native product, you need additional states:

- Generating — the model is producing output, character by character or chunk by chunk

- Partial — a useful but incomplete response has arrived

- Uncertain — the model has responded but with low confidence

- Hallucinating — the model has responded with something plausible but wrong (you often cannot know this at render time)

- Context-dependent — the same component renders differently based on what the model knows

A design system that does not account for these states will produce interfaces that feel broken, slow, or deceptive — even when the underlying model is performing well.
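One way to make these states concrete is to model them as a discriminated union that every AI-facing component accepts. The sketch below is illustrative only: the type name `AiResponseState`, its fields, and the `statusLine` helper are hypothetical and do not come from any library. Because hallucination usually cannot be detected at render time, this sketch represents it as a `flagged` state that is set after the fact, for example when a user reports the answer.

```typescript
// Hypothetical state model for an AI-facing component (all names are assumptions).
type AiResponseState =
  | { kind: "generating"; textSoFar: string }
  | { kind: "partial"; text: string }
  | { kind: "uncertain"; text: string; confidence: number } // confidence in [0, 1]
  | { kind: "flagged"; text: string; reason: string } // reported wrong after render
  | { kind: "complete"; text: string; contextSources: string[] };

// Maps each state to the short status text a component could show.
function statusLine(state: AiResponseState): string {
  switch (state.kind) {
    case "generating":
      return "Generating...";
    case "partial":
      return "Partial answer";
    case "uncertain":
      return "Low confidence (" + Math.round(state.confidence * 100) + "%)";
    case "flagged":
      return "Reported as incorrect: " + state.reason;
    case "complete":
      return state.contextSources.length > 0
        ? "Based on " + state.contextSources.join(", ")
        : "Complete";
    default: {
      // Compile-time exhaustiveness check: adding a new state without
      // handling it here becomes a type error.
      const unreachable: never = state;
      return unreachable;
    }
  }
}
```

The exhaustiveness check is the point of the design: when the system gains a new state, every component that has not accounted for it fails to compile instead of silently rendering something misleading.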

Streaming-first components

Streaming is not a loading state. It is a different interaction paradigm.

When an LLM streams a response, the user is reading content as it is being generated. This means:

- Text that grows word by word needs typography that does not reflow jarringly

- Actions that depend on the full response cannot appear until generation is complete

- Users may want to interrupt generation — the stop/cancel action is a first-class interaction, not an edge case

- Partial renders must be useful on their own, not a spinner with text appearing next to it
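The constraints above can be sketched as a small state reducer that a streaming component could sit on top of. This is a minimal sketch under assumptions: the names `StreamView`, `StreamEvent`, `reduceStream`, `canStop`, and `canUseFullResponseActions` are hypothetical, and a real component would feed it events from whatever streaming transport the product uses.

```typescript
// Hypothetical view model for a streaming response (all names are assumptions).
type StreamStatus = "idle" | "streaming" | "complete" | "stopped";

interface StreamView {
  status: StreamStatus;
  text: string;
}

type StreamEvent =
  | { type: "start" }
  | { type: "chunk"; text: string }
  | { type: "done" }
  | { type: "stop" }; // user pressed stop/cancel

function reduceStream(view: StreamView, event: StreamEvent): StreamView {
  switch (event.type) {
    case "start":
      return { status: "streaming", text: "" };
    case "chunk":
      // Chunks that arrive after stop or completion are ignored.
      return view.status === "streaming"
        ? { ...view, text: view.text + event.text }
        : view;
    case "done":
      return view.status === "streaming" ? { ...view, status: "complete" } : view;
    case "stop":
      // Stopping keeps the text received so far, so the partial render stays usable.
      return view.status === "streaming" ? { ...view, status: "stopped" } : view;
  }
}

// The stop action is available for the whole duration of generation.
function canStop(view: StreamView): boolean {
  return view.status === "streaming";
}

// Actions that need the full response (copy all, export, share) wait for completion.
function canUseFullResponseActions(view: StreamView): boolean {
  return view.status === "complete";
}
```

Keeping `stopped` distinct from `complete` lets the interface show the partial text while still withholding actions that assume a finished response.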

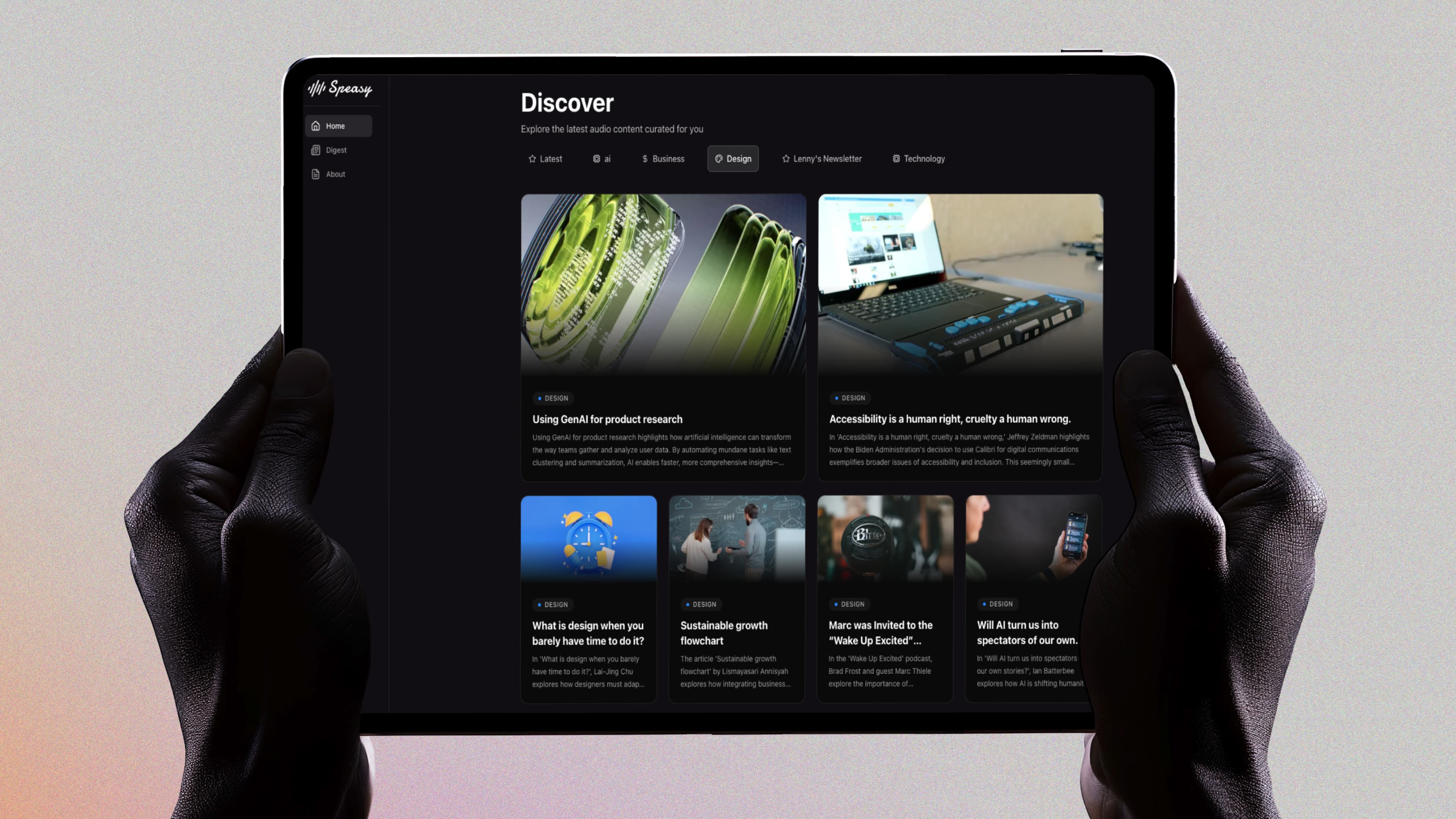

In Speasy, I designed audio generation as a streaming-first experience. The user hears the beginning of their digest before the full episode is generated. The progress indicator reflects generation progress, not just network progress. Designing this required treating streaming as a design constraint from the start, not a technical detail to handle later.

Confidence and uncertainty as design primitives

Traditional UI has binary states: the system knows something or it does not. AI systems have probabilistic outputs: a response carries more or less confidence, and the interface often cannot tell whether it is right.

Design systems for AI-native products need visual languages for uncertainty:

- Confidence tiers — strong/moderate/low match signals with distinct visual treatments

- Source attribution — surface where information came from to help users calibrate trust

- Revision affordances — make it easy to tell the system it was wrong, without blame

This is not about adding disclaimer text. It is about building uncertainty into the visual grammar of the product.
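As a rough illustration of confidence tiers as a design token, the sketch below maps a score to a tier and each tier to a visual treatment. Everything here is an assumption made for the example: the thresholds (0.8 and 0.5) are not calibrated values, and the names `ConfidenceTier`, `TierTreatment`, `toConfidenceTier`, and `treatmentFor` are hypothetical. It also assumes the model or a downstream scorer exposes a score between 0 and 1, which many systems do not.

```typescript
type ConfidenceTier = "strong" | "moderate" | "low";

// Hypothetical visual treatment for one tier.
interface TierTreatment {
  label: string;
  border: "solid" | "dashed" | "dotted";
  showSources: boolean; // source attribution shown by default?
  reviseProminence: "menu" | "inline"; // the revise action always exists
}

// Illustrative thresholds only; real values would need calibration.
const STRONG_MIN = 0.8;
const MODERATE_MIN = 0.5;

function toConfidenceTier(score: number): ConfidenceTier {
  if (isNaN(score) || score < 0 || score > 1) {
    throw new RangeError("score must be between 0 and 1");
  }
  if (score >= STRONG_MIN) return "strong";
  if (score >= MODERATE_MIN) return "moderate";
  return "low";
}

const TIER_TREATMENTS: Record<ConfidenceTier, TierTreatment> = {
  strong: { label: "Strong match", border: "solid", showSources: false, reviseProminence: "menu" },
  moderate: { label: "Possible match", border: "dashed", showSources: true, reviseProminence: "menu" },
  low: { label: "Weak match", border: "dotted", showSources: true, reviseProminence: "inline" },
};

function treatmentFor(score: number): TierTreatment {
  return TIER_TREATMENTS[toConfidenceTier(score)];
}
```

In this sketch a revision affordance exists at every tier and only its prominence changes, so correcting the system never depends on the system first admitting doubt.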

The permission layer as a design primitive

AI-native products access more context than traditional products: your files, your calendar, your messages, your history. The design system needs a coherent language for consent and transparency.

This means components for:

- Context indicators — what data is this response based on?

- Permission requests — inline, contextual consent, not modal-heavy permission flows

- Data boundaries — clear visual language for "this is your data" vs. "this is public data"

Designers who treat the permission layer as a legal concern, buried in settings, will build AI products that users do not trust.
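The three component types listed above can share one small data model. The following is a sketch under assumptions, not a prescribed schema: `ContextSource`, `ResponseContext`, `resolveContext`, and `contextIndicatorLabel` are hypothetical names, and the rule that public data needs no grant is a simplification made for the example.

```typescript
type DataBoundary = "user" | "public";

// Hypothetical description of one piece of context the model may draw on.
interface ContextSource {
  id: string;
  label: string; // shown to the user, e.g. "Calendar"
  boundary: DataBoundary;
  granted: boolean; // has the user consented to this source?
}

interface ResponseContext {
  used: ContextSource[]; // feeds the context indicator
  needsConsent: ContextSource[]; // feeds inline permission requests
}

// Splits requested sources into those usable now and those needing inline consent.
// Assumption: public sources are always usable; user sources require a grant.
function resolveContext(requested: ContextSource[]): ResponseContext {
  const used = requested.filter(
    (source) => source.boundary === "public" || source.granted
  );
  const needsConsent = requested.filter(
    (source) => source.boundary === "user" && !source.granted
  );
  return { used, needsConsent };
}

// Text for a context indicator, with the data boundary spelled out per source.
function contextIndicatorLabel(context: ResponseContext): string {
  if (context.used.length === 0) {
    return "No additional context used";
  }
  const parts = context.used.map(
    (source) =>
      source.label + (source.boundary === "user" ? " (your data)" : " (public data)")
  );
  return "Based on: " + parts.join(", ");
}
```

With sources for a granted calendar, ungranted messages, and public web results, the indicator would name the calendar and the web results, and the messages source would surface as an inline consent request next to the response rather than in a settings screen.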